Why did we make this project?

This project was developed in 2017 as part of the City University of New York Research Scholar Program. The research was led by Tahir Mitchell and Yahdira I. under the guidance of Professor Niu. Its purpose was to collect selfie images from the Flickr photo-sharing website and analyze the facial gestures exhibited in images from different regions around the world. The team aimed to explore possible correlations between the demographic information of the individuals in the images and their facial gestures. Our work was inspired by the research of Souza et al. [2015] in "Dawn of the Selfie Era: The Whos, Wheres, and Hows of Selfies on Instagram," but differed in that it was conducted entirely online and was accessible to anyone with an internet connection.

Since the original development of the project, there have been four additional versions, each representing an improvement in the illustration of this research. Our goal was to find a solution that was cost-effective, provided accurate data, and required fewer transactions between API endpoints.

Statistics

Total number of images searched: 1,296,634

Total number of faces calculated: 187,304*

* Not every image contains a face.

The many attempts of this project

In version 1 of this project, users were able to search for images on Flickr using a specified set of criteria, and the resulting images were displayed in a grid layout. However, there were some limitations to this approach. For example, if users clicked on multiple images too quickly, the page would fail. Additionally, the process of manually clicking on each image to be processed through Faceplusplus was cumbersome.

To address these issues, version 2 was developed. This version automated the process of retrieving images from Flickr and evaluating them in real time using Faceplusplus. While this version improved upon the previous one, it still had limitations. For example, the query rate limit for Faceplusplus sometimes prevented multiple users from sending images for evaluation simultaneously. In addition, the results for the same keyword and location were inconsistent. To try to address this, we implemented JavaScript promises, but they worked only intermittently. In this version, we also added the ability to compare countries.

In version 3, we sought to address the limitations of the previous versions. To do this, we rewrote the project using Gridsome, a static site generator. This gave us a static site with consistent data. We also developed a backend to fetch images from Flickr and process them using an open-source face recognition library called Deepface. To protect user privacy, we implemented an in-memory file system so that no history of user images was kept on our servers. However, Deepface did not return emotion and demographic data that was adequate for our purposes. As a result, we searched for a better library or service to use. In this version, we also implemented Amcharts to illustrate the data from our database.
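The in-memory approach described for version 3 can be illustrated with a minimal Python sketch. It is not the project's backend code: `analyze_in_memory` and `fake_analyzer` are hypothetical names, and the analyzer is a stand-in for whatever face analysis call is used. The only point it shows is that the image lives in a memory-backed buffer and is never written to disk.

```python
import io


def analyze_in_memory(image_bytes, analyzer):
    """Run `analyzer` on an image held only in RAM; nothing is written to disk."""
    buffer = io.BytesIO(image_bytes)  # file-like object backed by memory
    try:
        return analyzer(buffer)
    finally:
        buffer.close()  # release the buffer as soon as analysis finishes


def fake_analyzer(file_obj):
    # Hypothetical stand-in for a face analysis call; it only reports the byte count.
    return {"bytes_seen": len(file_obj.read())}


# Placeholder bytes, not a real image.
result = analyze_in_memory(b"\x89PNG fake image data", fake_analyzer)
print(result)
```

Because the buffer is closed in the `finally` clause, the image data is released even if the analyzer raises an error.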

In version 4, we focused on improving the data illustration, website performance, and data consistency. To achieve this, we rewrote the website using Gatsby, which provided better performance. We continued to use Amcharts to display our data, and added statistical tests such as the independent t-test and the ANOVA test to test our hypothesis. We also tested using Faceplusplus as our primary face analysis tool and kept Deepface as a secondary tool for detecting ethnicities. This version eliminated the rate issue found in the previous versions by allowing only one computer to use the endpoint. Future developments include the addition of Point of Interest images.
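As an illustration of the independent t-test mentioned for version 4, here is a minimal Python sketch that computes the pooled-variance (Student's) t statistic for two groups. The function name, the equal-variance assumption, and the sample scores are all assumptions made for this example; they are not taken from the project's code or data.

```python
from math import sqrt
from statistics import mean, variance


def independent_t_test(sample_a, sample_b):
    """Return (t statistic, degrees of freedom) for Student's two-sample t-test.

    Assumes both groups share the same population variance (pooled form).
    """
    n_a, n_b = len(sample_a), len(sample_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each sample needs at least two observations")
    df = n_a + n_b - 2
    # statistics.variance is the sample variance (n - 1 denominator).
    pooled_var = ((n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)) / df
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t_stat = (mean(sample_a) - mean(sample_b)) / std_err
    return t_stat, df


# Hypothetical per-face scores for two countries; the values are made up.
country_a = [62.0, 70.0, 58.0, 66.0, 74.0]
country_b = [50.0, 54.0, 46.0, 58.0, 52.0]
t_stat, df = independent_t_test(country_a, country_b)
print(f"t = {t_stat:.3f}, df = {df}")
```

Turning the statistic into a p-value requires the t distribution, which the standard library does not provide; a library routine such as `scipy.stats.ttest_ind` returns both the statistic and the p-value.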

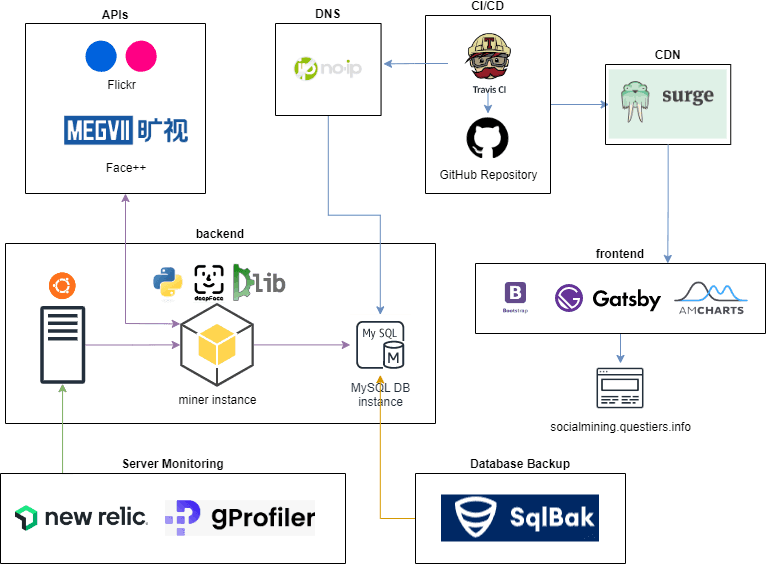

Architecture Diagram